Xiaomi releases an open-source 7B reasoning model that

(huggingface.co)

from yogthos@lemmy.ml to technology@lemmy.ml on 17 May 18:17

https://lemmy.ml/post/30252047

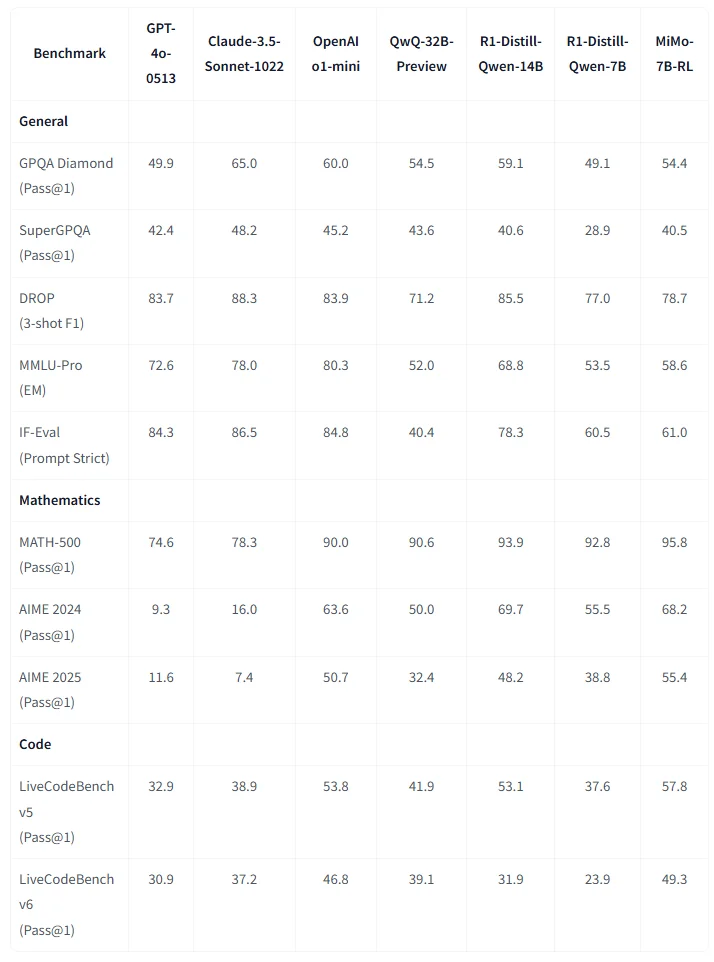

MiMo-7B is a series of reasoning-focused language models trained from scratch. It demonstrates that small models can achieve exceptional mathematical and code reasoning capabilities, even outperforming larger 32B models. Key innovations include:

- Pre-training optimizations: Enhanced data pipelines, multi-dimensional filtering, and a three-stage data mixture (25T tokens) with Multiple-Token Prediction for improved reasoning.

- Post-training techniques: Curated 130K math/code problems with rule-based rewards, a difficulty-driven code reward for sparse tasks, and data re-sampling to stabilize RL training.

- RL infrastructure: A Seamless Rollout Engine speeds up training by 2.29× and validation by 1.96×, paired with robust inference support.

MiMo-7B-RL matches OpenAI’s o1-mini on reasoning tasks, and all models (base, SFT, RL) are open-sourced to advance the community’s development of powerful reasoning LLMs.
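To make the post-training bullet a bit more concrete, here is a minimal sketch of what a rule-based math reward and a difficulty-weighted code reward could look like. This is not Xiaomi's implementation: the `CodeTest` type, the 1 to 3 difficulty scale, and the weighting scheme are all assumptions made up for illustration. The only idea taken from the summary is that partial credit on harder test cases gives a denser signal than an all-or-nothing pass/fail reward.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CodeTest:
    """One test case for a code problem (hypothetical structure)."""
    name: str
    difficulty: int  # assumed scale: 1 = easy, 3 = hard


def math_reward(predicted: str, reference: str) -> float:
    """Rule-based reward: 1.0 on an exact match after trimming whitespace, else 0.0."""
    return 1.0 if predicted.strip() == reference.strip() else 0.0


def code_reward(tests: list[CodeTest], passed: set[str]) -> float:
    """Partial credit weighted by test difficulty (assumed weighting).

    Returns the difficulty mass of the passed tests divided by the total
    difficulty mass, so solving only the easy tests still earns a small,
    non-zero reward.
    """
    total = sum(t.difficulty for t in tests)
    if total == 0:
        return 0.0
    earned = sum(t.difficulty for t in tests if t.name in passed)
    return earned / total


if __name__ == "__main__":
    tests = [CodeTest("easy", 1), CodeTest("medium", 2), CodeTest("hard", 3)]
    print(math_reward(" 42 ", "42"))               # 1.0
    print(code_reward(tests, {"easy", "medium"}))  # 0.5
```

With a strict pass/fail reward, the second call would score 0.0 because the hard test failed. The weighted version returns 0.5, which is the kind of intermediate signal that helps when full solutions are rare.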

An in-depth discussion of MiMo-7B: www.youtube.com/watch?v=y6mSdLgJYQY
