from helloyanis@furries.club to privacy@lemmy.ml on 15 Feb 18:01

https://furries.club/users/helloyanis/statuses/116075716933077295

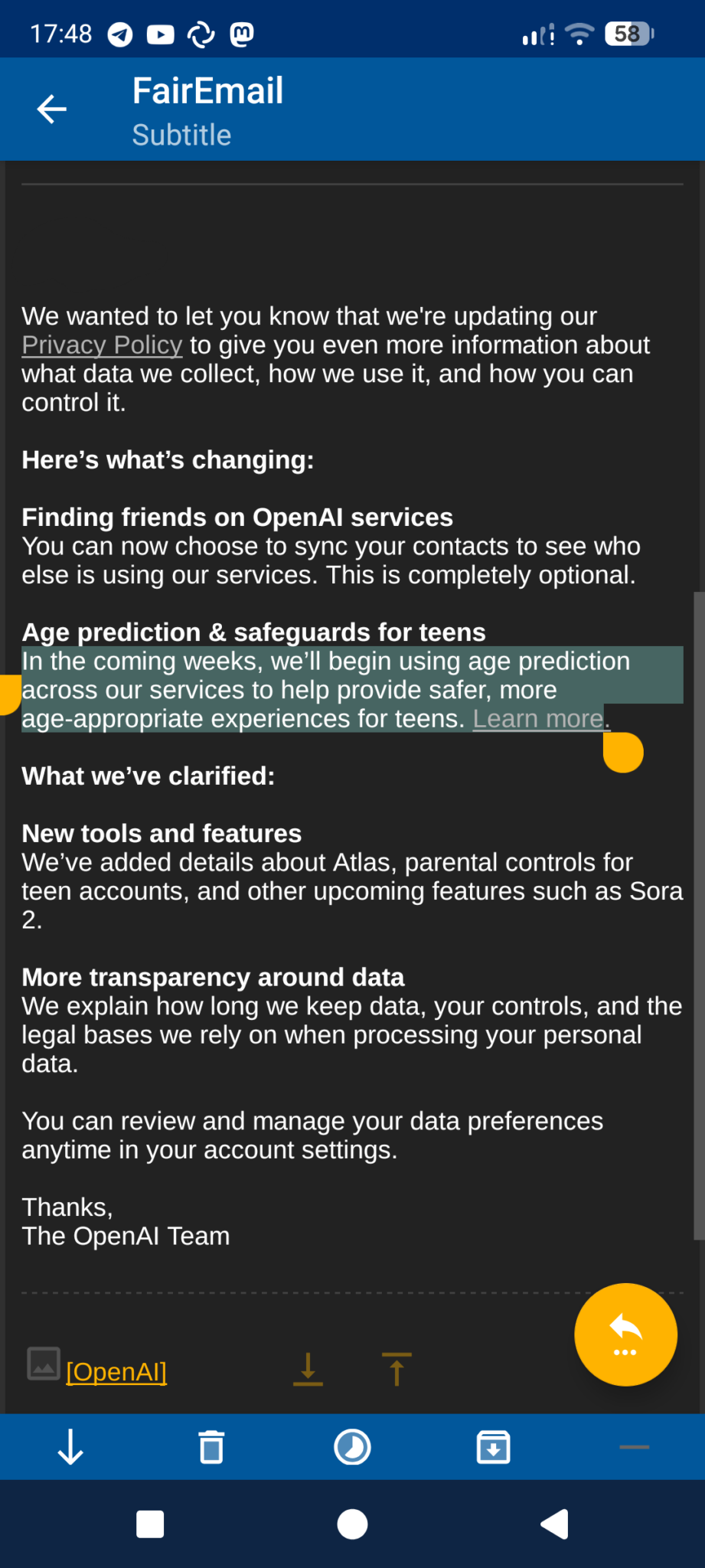

ChatGPT will now predict your age based on how you interact with it

It seems #openai is now doing age estimation too, based on this email I received.

After Google built the “Age signals API” into Android phones, now OpenAI will “predict your age based on how you interact with our services”.

No, I don’t want #chatgpt to analyze my age, thank you very much (I always used the private chat mode anyway, whatever impact it actually has on the data they use), so I will now switch to an alternative that doesn’t do that: https://chat.mistral.ai/

Don’t misunderstand me, protecting children is a good idea, but if that implies having to be analyzed by an #AI and having your experience change based on that, I’m heavily against it.

Although it’s convenient, if it starts analyzing my behaviour as well then I guess it will be a good time to start thinking a bit more on my own and only rely on AI as a last resort solution…

Their article https://help.openai.com/en/articles/12652064-age-prediction-in-chatgpt

@privacy@lemmy.ml @privacy@lemmy.world


I recommend duck.ai as an alternative way of accessing ChatGPT. It’s not a perfect solution but it’s better than accessing it straight from the OpenAI website

@ageedizzle I plan on using https://chat.mistral.ai since it's a completely different model that is open source and based in France, and so regulated by GDPR. If you still use ChatGPT but from somewhere else, it kinda defeats the purpose of switching away from it, in my opinion.

It’s better from a privacy perspective. But yeah, you’d still ultimately be using ChatGPT at the end of the day so another model might be preferable

“Le Chat” lol idk why this looked funny to me. Thanks for the link!

Extra funny when you learn what Chat GPT sounds like in French (chat, j’ai pété)

Of course it’s not weird that “chat” is chat in French, but it’s also cat which is silly

I recommend just searching for what you want and reading the results yourself.

I do too. But if someone really does want to use these tools then there are better and worse ways of doing it

True, that’s a good point

Yes but sometimes using AI like this is so much easier. I find it really useful in troubleshooting tech problems because you can tell it your specific setup and iterate on solutions until something works. So much easier than reading through 1,000 StackExchange threads which approximate your problem

Just make sure you practice safe searching, son. We love you.

Similarly, Proton has Lumo

lumo.proton.me/

That link appears to be broken on my end.

Looks like the hyperlink got messed up. Added the full url

Okay I see the url now thanks. I didn’t know proton had an AI. Seems neat.

For sure. And in that spirit -

Another option for people: pay $10 to get an OpenRouter account and use providers that 1) don’t train on your data 2) have ZDR (zero data retention)

openrouter.ai

You can use them directly there (a bit clunky) or via API with something like OpenWebUI.

github.com/open-webui/open-webui
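For anyone curious what "via API" looks like without a frontend, here is a rough Python sketch that only builds the request and does not send it. Assumptions on my part, not from the comment above: the endpoint is OpenRouter's OpenAI-compatible chat completions URL, the `provider.data_collection` field is the routing preference as I recall it from their docs (verify before relying on it), and the model slug and the `build_request` helper are purely hypothetical placeholders. ZDR is a separate setting that this sketch does not cover.

```python
import json
import urllib.request

# Assumed endpoint: OpenRouter's OpenAI-compatible chat completions route.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_request(api_key, prompt, model="example/placeholder-model"):
    """Build (but do not send) a chat request that asks OpenRouter to
    route only to providers that do not collect prompt data.

    `model` is a hypothetical placeholder slug; substitute a real one.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        # Assumed provider-routing preference; check the current docs.
        "provider": {"data_collection": "deny"},
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_request("sk-your-key-here", "Hello!")
    # To actually send it: urllib.request.urlopen(req)
    print(req.full_url)
    print(req.data.decode("utf-8"))
```

A frontend like OpenWebUI does the same thing under the hood, so this is mainly useful for seeing what data actually leaves your machine.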

Alternatively, if you have the hardware, self host. Qwen 3-4B 2507 Instruct matches / exceeds ChatGPT 4.1 nano and mini on almost all benchmarks… and the abliterated version is even better IMHO

huggingface.co/Qwen/Qwen3-4B-Instruct-2507

huggingface.co/…/Qwen3-4B-Hivemind-Instruct-NEO-M…

It should run acceptably on even 10 yr old hardware / no GPU.

Try any model with LMStudio and add the DuckDuckGo search plugin to it. It works better than you might think.

i hate living in the crypto, ai and big social media era

Makes it super easy to know what to avoid though

It’s only getting worse too. The entire US economy is being propped up by AI and crypto. It’s like the sub-prime mortgage craze of the early 2000s. Lots of money going into a system that will never recoup the investment. Either they have to find a way to extract value, or the bottom’s gonna fall out. Just wait for the too big to fail AI and tech bailouts.

So by worse you mean better? I can’t wait for the AI bubble to pop personally.

Did you skip the last sentence?

Sorry I don’t get what you mean by skip the last sentence.

It gets worse until the bubble pops is the idea, which I personally don’t think it will for the US.

Do you mean the AI bubble won’t pop? Currently that seems very unlikely, AI is entirely propped up by investors and they aren’t generating any real profit from consumers. Literally the definition of an unsustainable market.

No, what is meant is that “as long as the AI bubble lasts it will get worse, then it gets better”.

And to that I say “It won’t get any better after the bubble pops”.

There’s simply no incentive on the left side in the US to change anything that I think needs to change; instead it has its priorities on things that I don’t think will help the underlying issues at all.

Well welcome to US politics I guess.

Also I think things will get better after the AI bubble pops, there will be less AI overhype and AI shoehorning into everything. RAM prices will go down, GPU prices will go down and therefore most consumer tech items will also go down.

So if I talk about hemorrhoids enough, it will think I’m old and let me into the pr0n sites! Cool!!!

You would be surprised what health problems people under 18 can have.

Makes sense to me. Use the LLM itself to keep children from using it in ways they aren’t allowed to.

Ha, I thought this was an email from FairEmail at first. That would have been wild. This coming from Scam Altman, not surprising 🤷‍♀️

Good, maybe people will stop using it and switch to an open-weight Chinese model

If you use the app it’s still sending all of your data to the company that makes the model, I guess it depends if you prefer Chinese spyware or US spyware.

At least they use your data to make a model that’s open weight

Step 1 - don’t use apps on a phone and instead use an appropriate open source sandbox on an actual x86 machine.

The mobile-focused development cycle and its consequences have been a disaster for user agency.

Could you repeat that louder for the folks at the back?

(Also, it’s a statement that’s generally true in ways completely unrelated to AI.)

I don’t really see how using a sandbox protects you from data collection aside from being able to deny access to things outside of the sandbox. It would still collect data from any way you interact with the app. And using webapps or websites is a viable substitute as well.

(Selfhosting, not using a model that is not hosted on your own hardware)

Oh then yeah for sure.

And using either of them doesn’t guarantee your data won’t get out anyway when the product is provided by a corporation, as the recent TikTok outcome showed: a billion-dollar company would rather sell out than close down to protect its users.

I think if you really care about privacy, you should assume they do this, no matter what they say. By the way, great job on including the image transcript. :)

@treatcover Well, you can run Mistral locally but my laptop is not powerful enough haha

Also, yeah, a small copy paste goes a long way!

guy using chat gpt to ask about anime waifus and the bot confused if he’s 12 or 45.

At some point in the 2000s I chatted with the SmarterChild chatbot on MSN Messenger for a while. I was around 11 or 12 years old at the time.

I remember it once answering “sorry, web search is only available to adult users”, to which I responded “how do you know I’m just a child?”. I don’t remember what came before or after that… it might have asked me my DOB at some point before that, but to this day I’m not sure how it knew. Point is, none of this is new… 😁

“You just told me, kiddo!”

So if I don’t use it I’m dead?

The whole idea of software services where the output is not a function of the input, but rather a function of the input plus all the data the service has been able to harvest about you has always been awful. It was awful when google started doing it years ago and it’s awful when LLM frontends do it now. You should be able to know that what you are seeing is what others would see, and have some assurance that you aren’t being manipulated on a personal level.

It’s shocking how much this ‘personalized’ stuff is normalized.

Absolutely. Couldn’t agree more.

I just run everything possible locally which helps a lot. Nearly everything my friends do in “the cloud” I do on my own computer. Even stuff like spreadsheets they want to do in the cloud. It flummoxes me, how willing they are to share everything with big tech.

If you have a mid range or better GPU, you can even run a local LLM. I have used one for language translation. I cannot speak German but I was talking to a German speaker who did not speak English about a hobby. We could talk to each other despite not sharing a language. That’s practically sci-fi to me! I used a sandboxed LLM disallowed from any network access, to do that.

Even so, I am skeptical about most of what I see people use LLMs for. I am afraid of what they will allow bad actors to do. I am afraid of even worse corruption of the information space. I doubt the horse will re-enter the barn tho.

If at all possible, anyone that cares about their privacy should be self hosting.

lemmy.world/c/selfhosted

This will last right up until all the right-wingers get pissed they’re being identified as teenagers based on their 5th grade writing level.

If you absolutely must use ChatGPT, you can do it with Duck.ai as a proxy to protect your personal info.

I have duckduckgo as my default engine. But how to set up this proxy to ChatGPT?

No setup required, just go to duck.ai

So corporations will decide whether you should be forced to surrender commercially valuable personal data to them, that makes them more money. Great system guys! If we can’t trust mega corporations with political connections, who can we trust?

ai;dr

.

Damn, I’m stealing this like I am training an LLM

Just don’t use ChatGPT. I recommend DeepSeek or Qwen:

chat.qwen.ai

www.deepseek.com/en/

I recommend neither.

Just don’t use AI and you’ll be fine

Finally I see a use for AI. In a carnival midway. “I will guess your age for $250”.

No shit

Start asking it questions about what to do with your grandkids, and what sort of music the youth are into, and how you’re supposed to spend all your disposable retirement money.

ChatGPT, hello. This is the first time we have chat. What can I give my wife for our 18th wedding anniversary.

Hello kids, start every conversation with ai like this and you’ll be fine

Mhhhh another FairEmail user, a person of culture. I only used it because it was the only OSS client with encryption and signature support

the amount of problems i don’t have to deal with because i simply don’t use GenAI

Until your boss decides to use it.